Database Setup Checklist: From Development to Production

Setting up a database isn't just about clicking "install" and hoping for the best. It's a multi-step process that, if done incorrectly, can lead to performance issues, security vulnerabilities, and costly downtime. Here's what you need to know:

- Separate Environments: Keep development, testing, and production environments distinct to avoid cross-environment risks.

- Automate Setup: Use tools like Infrastructure as Code (IaC) to ensure consistent, error-free configurations.

- Secure Production Data: Mask or anonymize sensitive data before using it in non-production environments.

- Schema Design: Plan both logical and physical schemas carefully to balance performance and scalability.

- Version Control: Treat database changes like code - track, test, and document every update.

- Access Controls: Implement Role-Based Access Control (RBAC) and enforce least privilege principles.

- Data Encryption: Protect data at rest and in transit using strong encryption standards like AES-256.

- Regular Audits: Conduct security checks and vulnerability scans to stay ahead of risks.

- Backups and Recovery: Automate backups and test recovery plans to minimize data loss during failures.

- Performance Monitoring: Use indexing, query optimization, and real-time tracking to prevent bottlenecks.


Environment Setup and Configuration

Setting up your database environments the right way can save you from countless headaches down the road. By creating distinct spaces for development, testing, and production, you can keep your data safe, your team efficient, and your workflow smooth.

Setting Up Separate Environments

Having separate environments for development, testing, and production isn’t just a best practice - it’s essential. A development environment should be a sandbox where your team can experiment freely without fear of breaking anything important. Testing environments, on the other hand, need to closely resemble production to catch issues that could otherwise slip through. And production? That’s where stability and security take center stage.

Here’s why this matters: studies show that 84% of application stakeholders deal with major production issues caused by database change errors. Additionally, 57% of application updates require database schema changes, and 88% of teams spend over an hour fixing schema-related problems. As Kendra Little from Redgate explains:

"Dev and test environments should be resilient - but the best form of resilience is the ability to quickly reset and/or spin up new databases in the case of a failure or error."

For staging environments, aim to replicate production as closely as possible. Match hardware, network configurations, and data volumes to ensure this environment serves as an accurate final checkpoint before deployment. Using separate organizational structures, such as distinct projects or accounts in cloud setups, can help control costs, enforce access policies, and reduce the chances of accidental cross-environment impacts. Automating these processes adds an extra layer of consistency and security.

Automating Environment Setup

Setting up environments manually is risky - it’s prone to errors and inconsistencies. That’s where automation comes in. With Infrastructure as Code (IaC), you can turn your setup into a repeatable, version-controlled process, ensuring every environment starts on the same solid foundation.

Automation pays off big time. Companies using tools like Liquibase Enterprise report deploying database changes 80% faster, while Datical users have cut database errors by 90%. Automate repetitive tasks like provisioning, schema deployment, and configuration. Database Release Automation (DRA) takes it a step further by packaging and deploying changes across environments consistently. Testing your automation scripts early - with built-in logging and error tracking - helps identify and fix issues quickly, ensuring all environments are configured securely and uniformly.
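Here is a minimal Python sketch of the IaC idea. One version-controlled schema definition is applied idempotently to every environment, and a fingerprint of each resulting schema is compared to detect drift. SQLite in-memory databases stand in for real servers. The `SCHEMA` text and the `provision` and `schema_fingerprint` helpers are hypothetical; a real pipeline would use a dedicated IaC or database release tool, but the principle is the same.

```python
import hashlib
import sqlite3

# Hypothetical schema definition; in practice this file lives in version control.
SCHEMA = """
CREATE TABLE IF NOT EXISTS customers (
    id    INTEGER PRIMARY KEY,
    email TEXT NOT NULL UNIQUE
);
CREATE INDEX IF NOT EXISTS idx_customers_email ON customers (email);
"""


def provision(conn: sqlite3.Connection) -> None:
    """Apply the schema; IF NOT EXISTS makes repeated runs safe (idempotent)."""
    conn.executescript(SCHEMA)


def schema_fingerprint(conn: sqlite3.Connection) -> str:
    """Hash the stored DDL so environments can be compared for drift."""
    rows = conn.execute(
        "SELECT type, name, sql FROM sqlite_master "
        "WHERE name NOT LIKE 'sqlite_%' ORDER BY type, name"
    ).fetchall()
    return hashlib.sha256(repr(rows).encode()).hexdigest()


environments = {name: sqlite3.connect(":memory:") for name in ("dev", "test", "prod")}
for conn in environments.values():
    provision(conn)
    provision(conn)  # a second run must neither fail nor change anything

fingerprints = {name: schema_fingerprint(c) for name, c in environments.items()}
drifted = {name for name, fp in fingerprints.items() if fp != fingerprints["prod"]}
print("drifted environments:", drifted or "none")
```

Running the drift check on a schedule, not just at provisioning time, is what catches manual "quick fixes" applied to one environment only.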

Copying Production Data Safely

Using production data in non-production environments can improve testing but also opens the door to security and compliance risks. In fact, 54% of organizations have experienced data breaches in non-production environments, and 52% have failed compliance audits as a result.

To mitigate these risks, techniques like data masking, pseudonymization, and anonymization are key. These methods protect sensitive data while maintaining its structure for realistic testing. Static data masking, which irreversibly protects sensitive information, is trusted by 97% of organizations. Start by defining a clear data security policy that outlines how production data can be duplicated and how long it can remain in non-production environments. Only copy the data you absolutely need for testing.

Entity-based data masking ensures referential integrity and consistency across related tables. Automated tools can scan datasets for sensitive information and apply the right protection methods. For development environments, consider using synthetic data instead of production data. Tools like lakeFS allow you to create isolated data branches for testing, which can be removed once changes are merged into production. This approach strikes a balance between effective testing and strong data protection, ensuring productivity while meeting compliance standards.
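The referential-integrity point is easiest to see in code. The sketch below uses keyed hashing (HMAC-SHA-256) so that the same email address always maps to the same token in every table, while the original value is never stored. This is one pseudonymization technique among several, not the method any particular masking product uses. The `MASKING_KEY` value and the `mask_email` helper are hypothetical, and a real key would come from a secrets store.

```python
import hashlib
import hmac

# Hypothetical key; load it from a secrets manager, never from source code.
MASKING_KEY = b"replace-with-a-secret-from-your-vault"


def mask_email(email: str, key: bytes = MASKING_KEY) -> str:
    """Replace an email with a stable pseudonym that keeps a valid email shape."""
    normalized = email.strip().lower()
    digest = hmac.new(key, normalized.encode("utf-8"), hashlib.sha256).hexdigest()
    return f"user_{digest[:16]}@example.invalid"


customers = [{"id": 1, "email": "Ana@Example.com"}]
orders = [{"order_id": 10, "customer_email": "ana@example.com"}]

masked_customers = [{**c, "email": mask_email(c["email"])} for c in customers]
masked_orders = [{**o, "customer_email": mask_email(o["customer_email"])} for o in orders]

# The same person gets the same token in both tables, so joins still work.
assert masked_customers[0]["email"] == masked_orders[0]["customer_email"]
print(masked_customers[0]["email"])
```

Because the key stays in your secrets store, testers get stable values they can join on but cannot map back to real addresses without that key.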

Schema Design and Documentation

A well-thought-out schema lays the groundwork for performance, scalability, and easier maintenance.

Designing Logical and Physical Schemas

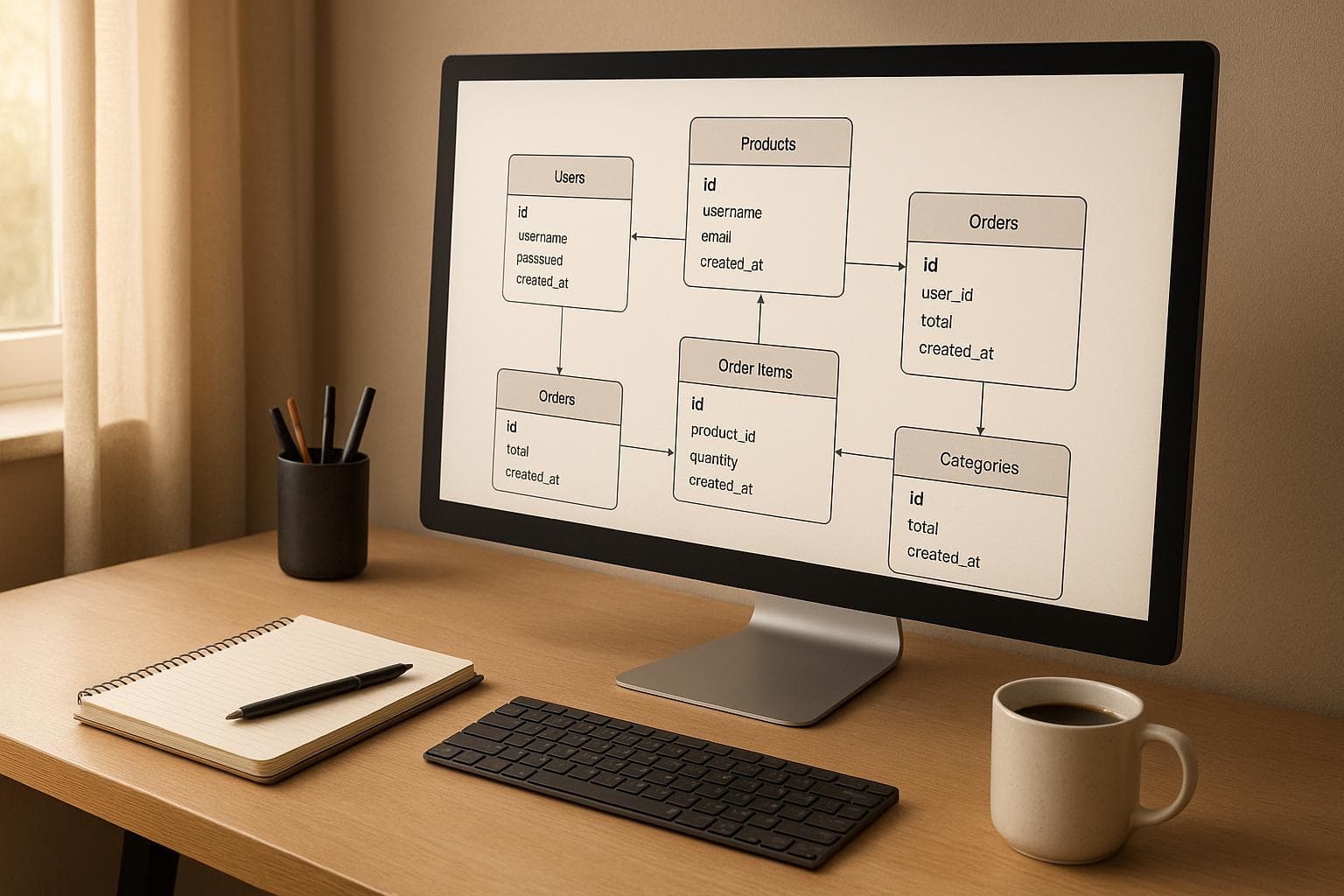

Creating a schema starts with analyzing your data requirements and translating them into a structure that functions effectively both logically and physically. A logical schema defines the data you store and how it relates, while a physical schema focuses on the efficient storage and retrieval of that data.

Start by using an Entity-Relationship Diagram (ERD) to map out data relationships. This visual tool can help you spot potential issues early. Physical design then determines how that model holds up at scale: Twitter, for example, partitions tweets and user interactions across multiple database servers using sharding, enabling it to scale horizontally and retrieve data efficiently. Similarly, Amazon DynamoDB ensures high availability and durability by replicating data across multiple Availability Zones.

Strive for a balance between normalization and denormalization. Normalization reduces redundancy, but denormalization can improve performance when needed. The goal is to maintain data integrity without sacrificing query speed.

When designing a physical schema, factor in storage and performance needs. Use indexing, partitioning, and clear naming conventions to optimize queries and simplify maintenance. As your data and user base grow, plan for scalability by incorporating strategies like data partitioning, replication, caching, and load balancing. Use indexes judiciously and monitor query performance regularly. To ensure data consistency, enforce integrity with primary keys, foreign keys, and constraints.
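Here is a small sketch of those integrity rules expressed as DDL, run through Python's built-in `sqlite3` module so it is self-contained. The `customers` and `orders` tables are hypothetical, and SQLite is only a stand-in: it enforces foreign keys only after `PRAGMA foreign_keys = ON`, whereas most server databases enforce them by default.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite leaves FK enforcement off by default

conn.executescript("""
CREATE TABLE customers (
    id    INTEGER PRIMARY KEY,          -- primary key: unique row identity
    email TEXT    NOT NULL UNIQUE       -- no duplicates, no missing values
);
CREATE TABLE orders (
    id          INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES customers (id),  -- foreign key
    total_cents INTEGER NOT NULL CHECK (total_cents >= 0)    -- business rule
);
-- Index the foreign key column, since joins and lookups filter on it.
CREATE INDEX idx_orders_customer_id ON orders (customer_id);
""")

conn.execute("INSERT INTO customers (id, email) VALUES (1, 'ana@example.com')")
conn.execute("INSERT INTO orders (customer_id, total_cents) VALUES (1, 2500)")


def rejected(sql: str) -> bool:
    """Return True if the database refuses the statement on integrity grounds."""
    try:
        conn.execute(sql)
    except sqlite3.IntegrityError:
        return True
    return False


print(rejected("INSERT INTO orders (customer_id, total_cents) VALUES (99, 100)"))  # True: no such customer
print(rejected("INSERT INTO orders (customer_id, total_cents) VALUES (1, -5)"))    # True: negative total
```

Pushing these rules into the schema means every application and script that touches the data gets the same checks for free.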

Managing Schema Changes with Version Control

Once your schema is in place, managing changes effectively becomes crucial. Database schema changes are inevitable, but they don’t have to be chaotic. Treat schema version control with the same importance as application code version control. Research shows that top-performing DevOps teams are 3.4 times more likely to include database change management in their workflows, and about half of all significant application changes involve database updates.

There are two main approaches to database version control: state-based and migration-based. In the state-based method, you define the desired end state of the database and use tools to generate the SQL scripts that reach it. The migration-based approach, in contrast, explicitly records each change as an ordered script. Be cautious with state-based migrations, as relying solely on automated tools can sometimes result in destructive changes: a renamed column, for instance, may be interpreted as a dropped column plus a new one, discarding its data.

Establish a clear versioning system, like semantic versioning, to track major, minor, and patch updates. Write incremental and reversible migration scripts to allow for rollbacks, and maintain a detailed change log for auditing purposes. Integrate version control with your CI/CD pipeline to automate migrations and deployments. Always test changes in a staging environment before rolling them out to production. Schedule updates during low-impact times to minimize disruptions. Smaller, frequent schema changes with automated validation can reduce downtime, and having a rollback plan is essential.
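As a rough illustration of the migration-based approach, the sketch below keeps an ordered list of paired upgrade/downgrade scripts and records the applied version in a table, so the database can move forward or roll back one step at a time, each step inside its own transaction. The `MIGRATIONS` list, the `schema_version` table, and the helper functions are hypothetical; dedicated tools such as Liquibase add locking, checksums, and much more.

```python
import sqlite3

# Hypothetical ordered migrations: (version, upgrade SQL, downgrade SQL).
MIGRATIONS = [
    (1, "CREATE TABLE customers (id INTEGER PRIMARY KEY, email TEXT NOT NULL)",
        "DROP TABLE customers"),
    (2, "CREATE INDEX idx_customers_email ON customers (email)",
        "DROP INDEX idx_customers_email"),
]


def current_version(conn: sqlite3.Connection) -> int:
    conn.execute("CREATE TABLE IF NOT EXISTS schema_version (version INTEGER NOT NULL)")
    return conn.execute("SELECT MAX(version) FROM schema_version").fetchone()[0] or 0


def run_step(conn: sqlite3.Connection, change_sql: str, bookkeeping: tuple) -> None:
    """Run one schema change plus its version bookkeeping in a single transaction."""
    conn.execute("BEGIN")
    try:
        conn.execute(change_sql)
        conn.execute(*bookkeeping)
        conn.execute("COMMIT")
    except Exception:
        conn.execute("ROLLBACK")  # SQLite DDL is transactional, so nothing half-applies
        raise


def migrate(conn: sqlite3.Connection, target: int) -> int:
    """Step forward or backward one migration at a time until `target` is reached."""
    version = current_version(conn)
    while version < target:
        _, up, _ = MIGRATIONS[version]  # list index == next version - 1
        run_step(conn, up, ("INSERT INTO schema_version VALUES (?)", (version + 1,)))
        version += 1
    while version > target:
        _, _, down = MIGRATIONS[version - 1]
        run_step(conn, down, ("DELETE FROM schema_version WHERE version = ?", (version,)))
        version -= 1
    return version


conn = sqlite3.connect(":memory:", isolation_level=None)  # explicit BEGIN/COMMIT
print(migrate(conn, 2))  # 2: applies migrations 1 and 2
print(migrate(conn, 1))  # 1: reverts only migration 2
```

Keeping each step small and reversible is what makes the rollback plan practical: reverting means running one known downgrade script, not improvising under pressure.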

Keeping Documentation Current

Schema changes should go hand-in-hand with up-to-date documentation to keep your team aligned. Without proper documentation, teams can waste valuable time reverse-engineering database logic instead of focusing on meaningful tasks. Current schema documentation is vital for understanding the database structure, improving collaboration, and aligning with business goals.

Your documentation should include key resources like schema diagrams, data dictionaries, and user guides. Make it clear and accessible so all stakeholders can understand and update it easily. Store documentation in a shared location for team-wide access.

Automation can simplify this process. Generate documentation directly from your database to ensure accuracy, and integrate it with tools like Git to track changes alongside schema updates.
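A minimal sketch of generating a data dictionary straight from the database catalog is shown below. It reads SQLite's `PRAGMA table_info` and emits a Markdown table that could be committed to Git next to the migration that changed the schema. The `data_dictionary` helper is hypothetical; other engines expose the same information through their own catalogs (for example `information_schema`).

```python
import sqlite3


def data_dictionary(conn: sqlite3.Connection) -> str:
    """Build a Markdown data dictionary from the live schema (SQLite catalog)."""
    lines = [
        "| Table | Column | Type | Declared NOT NULL | Primary key |",
        "|---|---|---|---|---|",
    ]
    tables = conn.execute(
        "SELECT name FROM sqlite_master WHERE type = 'table' "
        "AND name NOT LIKE 'sqlite_%' ORDER BY name"
    ).fetchall()
    for (table,) in tables:
        # PRAGMA table_info yields (cid, name, type, notnull, dflt_value, pk).
        for _, column, col_type, notnull, _, pk in conn.execute(f'PRAGMA table_info("{table}")'):
            lines.append(
                f"| {table} | {column} | {col_type} | "
                f"{'yes' if notnull else 'no'} | {'yes' if pk else 'no'} |"
            )
    return "\n".join(lines)


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, email TEXT NOT NULL)")
print(data_dictionary(conn))
```

Regenerating this file in CI on every schema change keeps the dictionary from drifting away from the real database.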

| Documentation Component | Purpose | Update Frequency |
|---|---|---|
| Schema Diagrams | Visualize table relationships | After each schema change |
| Data Dictionary | Define fields and constraints | Real-time or daily |
| User Guides | Provide operational instructions | Monthly or as needed |

Leverage tools like DbSchema to streamline documentation. It supports over 70 databases and offers Git-based change tracking, interactive HTML5 documentation, and automated scripts. You can export documentation in formats like HTML, PDF, CSV, and JSON.

Regular reviews ensure your documentation evolves with your business needs. By tying documentation updates to schema changes, you make maintenance, troubleshooting, and future updates easier while reducing errors.

Security and Compliance

Protecting your database is more than just good practice - it's a necessity. With the average cost of a data breach reaching $4.88 million in 2024, up 10% from the previous year, the stakes have never been higher.

Setting Up Access Controls

Start with the principle of least privilege, ensuring users only have access to what they absolutely need. This is where Role-Based Access Control (RBAC) comes into play. Instead of assigning permissions to individuals, create roles tailored to specific job functions and assign permissions to those roles.

To implement RBAC effectively:

- Conduct a thorough assessment of your data, processes, and systems.

- Assemble a team of experienced business analysts to gather RBAC requirements from each department.

- Define roles that align with your business needs and processes.

Add an extra layer of protection by enforcing multi-factor authentication (MFA) alongside strong password policies. For sensitive operations, adopt Just-In-Time (JIT) access, granting temporary permissions only when needed. For instance, a developer might receive elevated access for a maintenance task, with those permissions automatically revoked afterward. Regular access reviews are also crucial to identify outdated permissions and prevent privilege creep.

Don't overlook secure onboarding and offboarding processes. Quickly grant or revoke access as employees join, change roles, or leave the company. Test RBAC roles in a staging environment to ensure they meet access requirements, and establish a maintenance plan to adjust roles as your business evolves.
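The RBAC and Just-In-Time ideas above can be sketched in a few lines of Python: permissions attach to roles, users hold roles, and elevated access is a temporary grant with an expiry. The role names, permission strings, and the `User`, `grant_jit`, and `is_allowed` names are hypothetical; in production you would express roles with your database's own `GRANT`/role system and an access-management tool.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta, timezone

# Hypothetical roles mapped to the minimum permissions each job needs.
ROLE_PERMISSIONS = {
    "analyst": {"orders:read"},
    "developer": {"orders:read", "schema:read"},
    "dba": {"orders:read", "schema:read", "schema:write", "backup:run"},
}


@dataclass
class User:
    name: str
    roles: set[str]
    # Just-in-time grants: permission -> expiry timestamp.
    temporary: dict[str, datetime] = field(default_factory=dict)


def grant_jit(user: User, permission: str, minutes: int, now: datetime) -> None:
    """Grant a permission that lapses automatically after `minutes`."""
    user.temporary[permission] = now + timedelta(minutes=minutes)


def is_allowed(user: User, permission: str, now: datetime) -> bool:
    """Least privilege: allow only via a role or an unexpired temporary grant."""
    if any(permission in ROLE_PERMISSIONS.get(role, set()) for role in user.roles):
        return True
    expiry = user.temporary.get(permission)
    return expiry is not None and now < expiry


now = datetime(2024, 1, 1, 9, 0, tzinfo=timezone.utc)
dev = User("dana", {"developer"})
print(is_allowed(dev, "schema:write", now))                          # False: not in role
grant_jit(dev, "schema:write", minutes=30, now=now)
print(is_allowed(dev, "schema:write", now + timedelta(minutes=10)))  # True: inside window
print(is_allowed(dev, "schema:write", now + timedelta(minutes=45)))  # False: expired
```

Because expiry is checked on every request, nobody has to remember to revoke the maintenance access afterward.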

Once access is under control, focus on protecting the data itself with encryption.

Implementing Data Encryption

Encryption is your frontline defense for safeguarding data, whether it's stored in your database or traveling across networks. But it's not just about encrypting - it's about doing it right and managing it effectively.

Use AES-256 for symmetric encryption and RSA (2048-bit minimum) for asymmetric encryption, steering clear of outdated algorithms. Encryption at rest ensures that even if physical devices are compromised, the data remains secure. Encryption in transit protects data moving through networks from interception and eavesdropping.

For data in transit, implement TLS/SSL protocols to create secure communication channels between your applications and databases. End-to-end encryption (E2EE) ensures that data remains encrypted from its origin to its destination.
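On the client side, "implement TLS" largely means refusing unverified or outdated connections. The sketch below builds a standard-library `ssl.SSLContext` that verifies the server certificate and hostname and rejects anything older than TLS 1.2. Many Python database drivers accept such a context, but the parameter name differs between drivers, so treat the hand-off as an assumption and check your driver's documentation. The `make_db_tls_context` helper and the optional CA bundle path are hypothetical.

```python
import ssl
from typing import Optional


def make_db_tls_context(ca_file: Optional[str] = None) -> ssl.SSLContext:
    """Build a client-side TLS context for database connections (sketch)."""
    # create_default_context() enables certificate verification and hostname
    # checking; ca_file can point at your database provider's CA bundle.
    ctx = ssl.create_default_context(cafile=ca_file)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2  # refuse older protocol versions
    return ctx


ctx = make_db_tls_context()
print(ctx.verify_mode == ssl.CERT_REQUIRED, ctx.check_hostname)  # True True
```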

Key management is just as important as encryption itself. Use hardware security modules (HSMs) or cloud-based key management systems (KMS) to store keys securely. Avoid hardcoding keys into your application code; instead, retrieve them at runtime from a secrets vault or KMS, or have your deployment platform inject them as environment variables (which keeps them out of source control, although environment variables are less protected than a vault). Regularly test your system to understand how encryption impacts performance, especially when dealing with large datasets or limited resources.
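A small sketch of the "no hardcoded keys" rule: the application reads a base64-encoded key injected at runtime and refuses to start if it is missing or is not the 32 bytes that AES-256 requires. The `DB_ENCRYPTION_KEY` variable name and the `load_aes256_key` helper are hypothetical, and how the variable gets populated (vault agent, KMS integration, orchestrator secret) depends on your platform.

```python
import base64
import os


class MissingKeyError(RuntimeError):
    """Raised when no encryption key was provided to the process."""


def load_aes256_key(var_name: str = "DB_ENCRYPTION_KEY") -> bytes:
    """Read a base64-encoded 256-bit key injected at runtime, never from source code."""
    encoded = os.environ.get(var_name)
    if not encoded:
        raise MissingKeyError(f"{var_name} is not set; refusing to start without a key")
    key = base64.b64decode(encoded, validate=True)
    if len(key) != 32:  # AES-256 needs exactly 32 bytes of key material
        raise ValueError(f"{var_name} must decode to 32 bytes, got {len(key)}")
    return key


# Demo only: in a real deployment the platform or secrets manager sets this variable.
os.environ["DB_ENCRYPTION_KEY"] = base64.b64encode(os.urandom(32)).decode()
print(len(load_aes256_key()))  # 32
```

Failing fast at startup is deliberate: a service that silently falls back to a default key is worse than one that does not start.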

To further secure your encryption systems, protect them with strong passwords and multi-factor authentication, maintaining a layered approach to security.

Regular Security Audits and Vulnerability Checks

Security isn't a one-and-done task. Regular audits and vulnerability checks are essential to identify potential weaknesses before attackers do. Data theft and leaks account for 32% of all cyber incidents, making proactive monitoring critical.

Start by identifying the regulations that apply to your organization, such as GDPR, CCPA, HIPAA, or PCI DSS. In 2023 alone, GDPR fines in the EU totaled €2.1 billion, while HIPAA violations in the US led to over $4.1 million in penalties.

Classify your sensitive data based on its regulatory requirements and business impact. This includes personal information (PII), financial records, and intellectual property. Use this classification to prioritize your audits and compliance efforts.

Implement continuous user activity monitoring with logging and auditing systems. Track logins, data access patterns, and administrative actions to create an audit trail that not only helps detect suspicious activity but also supports compliance reporting. Test your incident response plan regularly to ensure you're prepared to detect and recover from security incidents.
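For data changes specifically, the database itself can write the audit trail. The sketch below uses an SQLite trigger to append a row to an `audit_log` table on every update; the table and trigger names are hypothetical. Triggers only see data modifications, so logins, reads, and administrative actions still need the database's native audit logging.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE accounts (id INTEGER PRIMARY KEY, balance_cents INTEGER NOT NULL);

-- Append-only audit trail: one row per change, written by the database itself.
CREATE TABLE audit_log (
    id         INTEGER PRIMARY KEY,
    table_name TEXT NOT NULL,
    row_id     INTEGER NOT NULL,
    action     TEXT NOT NULL,
    old_value  TEXT,
    new_value  TEXT,
    changed_at TEXT NOT NULL DEFAULT (datetime('now'))
);

CREATE TRIGGER accounts_audit_update AFTER UPDATE ON accounts
BEGIN
    INSERT INTO audit_log (table_name, row_id, action, old_value, new_value)
    VALUES ('accounts', OLD.id, 'UPDATE', OLD.balance_cents, NEW.balance_cents);
END;
""")

conn.execute("INSERT INTO accounts (id, balance_cents) VALUES (1, 1000)")
conn.execute("UPDATE accounts SET balance_cents = 250 WHERE id = 1")

for row in conn.execute("SELECT table_name, row_id, action, old_value, new_value FROM audit_log"):
    print(row)  # ('accounts', 1, 'UPDATE', '1000', '250')
```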

Audit high-risk systems quarterly and lower-risk ones annually. Monitor emerging trends like AI-driven automated risk assessments and real-time monitoring, as well as the rise of zero-trust frameworks, which verify every access request instead of trusting users or devices based on their network location.

Finally, automate vulnerability scanning to keep pace with rapidly evolving threats. Prioritize patching based on the severity of the threat and your specific risks. Document your findings and remediation efforts to show regulators your commitment to compliance and track your progress over time.
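Severity-based prioritization can be automated with the qualitative rating scale from the CVSS v3.x specification (Critical 9.0 to 10.0, High 7.0 to 8.9, Medium 4.0 to 6.9, Low 0.1 to 3.9). In the sketch below, the `internet_facing` tiebreaker is a hypothetical stand-in for "your specific risks", and the findings are made-up placeholders.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    ref: str               # scanner's identifier for the finding
    cvss: float            # CVSS v3.x base score, 0.0-10.0
    internet_facing: bool  # hypothetical exposure flag used as a tiebreaker


def severity(score: float) -> str:
    """Map a CVSS v3.x base score to its qualitative rating."""
    if score >= 9.0:
        return "Critical"
    if score >= 7.0:
        return "High"
    if score >= 4.0:
        return "Medium"
    if score > 0.0:
        return "Low"
    return "None"


def patch_order(findings: list[Finding]) -> list[Finding]:
    """Highest score first; among equal scores, exposed systems first."""
    return sorted(findings, key=lambda f: (f.cvss, f.internet_facing), reverse=True)


findings = [
    Finding("finding-a", 5.3, internet_facing=True),
    Finding("finding-b", 9.8, internet_facing=False),
    Finding("finding-c", 9.8, internet_facing=True),
]
for f in patch_order(findings):
    print(f.ref, severity(f.cvss))  # finding-c Critical, finding-b Critical, finding-a Medium
```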


Data Migration and Backup Setup

Data migrations often run into problems, and failed migrations can lead to financial losses of up to $100 million annually. Avoiding them depends on careful planning, rigorous testing, and reliable backup strategies.

Planning and Testing Data Migrations

A well-executed data migration involves three critical testing phases: pre-migration, migration, and post-migration. Each phase plays a vital role in ensuring the process runs smoothly and the data remains intact.

Pre-migration testing is all about preparation. Start by evaluating your source and target systems, mapping data fields, and identifying any compatibility challenges. Use testing environments that closely mimic your production setup, establish baseline performance metrics, and choose the right migration tools. Most importantly, develop a solid backup plan before making any changes to live data.

During the migration phase, follow the plan closely. Monitor transfer rates, address errors immediately, and validate both performance and functionality in real time.

Post-migration testing ensures everything works as it should. Conduct thorough data validation, test the performance of the new system, verify data integrity, and check your rollback procedures. Include backward compatibility tests and user acceptance tests to confirm the system meets all requirements.

When deciding on a migration approach, weigh the pros and cons. A Big Bang Migration offers a quick transition but comes with higher risks. On the other hand, a Phased Approach breaks the process into smaller, more manageable steps, reducing risks and minimizing disruptions. For instance, Paylocity used Fivetran to speed up its CRM migration from Microsoft Dynamics to Salesforce by 92%, centralizing data into Google BigQuery for analysis. Similarly, YipitData streamlined operations by automating data ingestion from over 20 SaaS platforms into Databricks, reducing the need for multiple Amazon Redshift clusters.

Platforms like newdb.io simplify data migration by supporting multiple formats such as SQL, CSV, JSON, and XLSX. This flexibility makes transitioning from older systems more straightforward and less technically demanding.

Once a migration plan is in place, the focus shifts to setting up dependable backup and recovery systems to safeguard data integrity.

Setting Up Automated Backups and Recovery

With ransomware attacks projected to rise by 700% by 2025 and the average cost of such incidents hitting $4.4 million, automated backup and recovery systems are no longer optional - they’re essential. These systems should minimize downtime and data loss while ensuring consistency during recovery.

Start by defining system-specific Recovery Time Objectives (RTOs) and Recovery Point Objectives (RPOs). RTOs specify the maximum allowable downtime, while RPOs determine the acceptable amount of data loss. Tailor these objectives to each system’s importance, focusing resources on critical applications and using cost-effective solutions for less essential systems.
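A quick way to sanity-check a backup schedule against an RPO is to compute its worst case. Assuming each scheduled backup captures the database as of the moment it starts, a failure just before a backup finishes loses everything since the previous backup started: one interval plus one backup duration. The helper names and the example schedules below are hypothetical.

```python
from datetime import timedelta


def worst_case_data_loss(interval: timedelta, duration: timedelta) -> timedelta:
    """Failure just before a backup completes loses data back to the previous backup's start."""
    return interval + duration


def meets_rpo(interval: timedelta, duration: timedelta, rpo: timedelta) -> bool:
    return worst_case_data_loss(interval, duration) <= rpo


def meets_rto(measured_restore: timedelta, rto: timedelta) -> bool:
    """Use restore times measured in real recovery drills, not estimates."""
    return measured_restore <= rto


# Hypothetical tiers: a critical orders database vs. an internal reporting one.
print(meets_rpo(timedelta(hours=1), timedelta(minutes=5), rpo=timedelta(minutes=15)))    # False
print(meets_rpo(timedelta(minutes=10), timedelta(minutes=2), rpo=timedelta(minutes=15)))  # True
print(meets_rpo(timedelta(hours=24), timedelta(minutes=30), rpo=timedelta(hours=48)))    # True
```

When the numbers do not fit, that is the signal to move a system to continuous replication instead of simply running backups more often.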

For real-time protection, implement Continuous Data Protection (CDP) to replicate workloads as they change. Combine snapshot-based backups with replication for faster recovery. To guard against ransomware and insider threats, use immutable backups stored in a write-once-read-many (WORM) format, which prevents unauthorized modifications or deletions - even by hackers or internal users.

Incorporate application-specific failover mechanisms aligned with your recovery goals into your DevOps processes. Predictive monitoring tools can help identify potential failures or performance issues before they escalate. Regularly test your disaster recovery plans and simulate emergency scenarios to uncover gaps and ensure your team is ready for real-world incidents. Given that 82% of breaches involve human error or misuse, training staff on emergency procedures is a must.
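A backup only counts once you have restored it. The sketch below takes an online snapshot with Python's `sqlite3` backup API and immediately verifies the copy with an integrity check and per-table row counts. SQLite is a stand-in here, and `backup_and_verify` is a hypothetical helper; server databases have their own backup tools, but the "back up, then prove it restores" pattern carries over.

```python
import os
import sqlite3
import tempfile


def backup_and_verify(source: sqlite3.Connection, target_path: str) -> bool:
    """Snapshot `source` to a file, then check integrity and per-table row counts."""
    target = sqlite3.connect(target_path)
    try:
        source.backup(target)  # online backup API: copies a consistent snapshot
        ok = target.execute("PRAGMA integrity_check").fetchone()[0] == "ok"
        tables = [name for (name,) in source.execute(
            "SELECT name FROM sqlite_master WHERE type = 'table' AND name NOT LIKE 'sqlite_%'")]
        for table in tables:
            src_count = source.execute(f'SELECT COUNT(*) FROM "{table}"').fetchone()[0]
            dst_count = target.execute(f'SELECT COUNT(*) FROM "{table}"').fetchone()[0]
            ok = ok and src_count == dst_count
        return ok
    finally:
        target.close()


src = sqlite3.connect(":memory:")
src.execute("CREATE TABLE events (id INTEGER PRIMARY KEY, payload TEXT)")
src.executemany("INSERT INTO events (payload) VALUES (?)", [(f"e{i}",) for i in range(100)])
src.commit()

with tempfile.TemporaryDirectory() as workdir:
    print(backup_and_verify(src, os.path.join(workdir, "snapshot.db")))  # True
```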

Tracking Migration Progress with Logs

To complement your migration and backup efforts, effective logging is critical for tracking progress and resolving issues quickly. Monitoring key performance indicators (KPIs) helps measure success, align migration efforts with business goals, and optimize resource use [28].

Focus on data quality by tracking error-free transfers and ensuring consistency between the source and target systems [28]. Set up automated alerts to flag issues when error rates exceed acceptable thresholds, enabling you to address problems early.

Keep an eye on timelines and budgets. Large-scale migrations involving hundreds of gigabytes of data can take hours to complete. Compare actual progress against your plan to identify bottlenecks and make adjustments as needed.
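Progress tracking is easiest when the copy itself reports it. The sketch below moves rows in primary-key order in fixed-size batches (keyset pagination), commits each batch, and logs cumulative progress so you can compare it against the plan. It assumes a hypothetical table with positive integer `id` keys and a `payload` column; table names are interpolated into SQL, so only pass trusted names.

```python
import logging
import sqlite3

logging.basicConfig(level=logging.INFO, format="%(asctime)s %(levelname)s %(message)s")
log = logging.getLogger("migration")


def copy_in_batches(source: sqlite3.Connection, target: sqlite3.Connection,
                    table: str, batch_size: int = 1000) -> int:
    """Copy rows in primary-key order, logging progress after every batch."""
    total = source.execute(f"SELECT COUNT(*) FROM {table}").fetchone()[0]
    copied, last_id = 0, 0  # assumes ids are positive integers
    while True:
        rows = source.execute(
            f"SELECT id, payload FROM {table} WHERE id > ? ORDER BY id LIMIT ?",
            (last_id, batch_size),
        ).fetchall()
        if not rows:
            break
        with target:  # commit per batch, so a failure only loses the current batch
            target.executemany(f"INSERT INTO {table} (id, payload) VALUES (?, ?)", rows)
        copied += len(rows)
        last_id = rows[-1][0]
        log.info("%s: %d/%d rows (%.1f%%)", table, copied, total, 100 * copied / total)
    return copied


src, dst = sqlite3.connect(":memory:"), sqlite3.connect(":memory:")
for conn in (src, dst):
    conn.execute("CREATE TABLE events (id INTEGER PRIMARY KEY, payload TEXT)")
src.executemany("INSERT INTO events (id, payload) VALUES (?, ?)",
                [(i, f"e{i}") for i in range(1, 2501)])
src.commit()
copied = copy_in_batches(src, dst, "events", batch_size=1000)
```

Because progress is keyed on the last copied `id`, an interrupted run can resume from the target's highest `id` instead of starting over.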

System performance metrics are equally important. Monitor uptime, response times, and resource usage during migration windows to minimize disruption to users and business operations.

For lift-and-shift migrations, use Data Diff Monitors to compare source and target systems continuously. This ensures data parity before retiring the old system. In ongoing replication scenarios, schedule these monitors to match replication intervals, reducing the risk of misaligned reporting.
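The core of a source-to-target diff can be sketched without any particular product: hash every row, key the hashes by primary key, and compare the two sides. This is a generic illustration, not how any specific Data Diff tool is implemented. It assumes both tables have the same column order and an `id` key; across different engines, type representations can differ, so real tools normalize values before hashing.

```python
import hashlib
import sqlite3


def row_hashes(conn: sqlite3.Connection, table: str, key: str = "id") -> dict:
    """Map each primary key to a hash of the full row."""
    cur = conn.execute(f"SELECT * FROM {table} ORDER BY {key}")
    key_index = [d[0] for d in cur.description].index(key)
    return {row[key_index]: hashlib.sha256(repr(row).encode()).hexdigest() for row in cur}


def diff_tables(source: sqlite3.Connection, target: sqlite3.Connection,
                table: str, key: str = "id") -> dict:
    src, dst = row_hashes(source, table, key), row_hashes(target, table, key)
    return {
        "missing_in_target": sorted(src.keys() - dst.keys()),
        "extra_in_target": sorted(dst.keys() - src.keys()),
        "changed": sorted(k for k in src.keys() & dst.keys() if src[k] != dst[k]),
    }


source, target = sqlite3.connect(":memory:"), sqlite3.connect(":memory:")
for conn in (source, target):
    conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, email TEXT)")
source.executemany("INSERT INTO customers VALUES (?, ?)",
                   [(1, "a@x.io"), (2, "b@x.io"), (3, "c@x.io")])
target.executemany("INSERT INTO customers VALUES (?, ?)", [(1, "a@x.io"), (2, "B@x.io")])
print(diff_tables(source, target, "customers"))
# {'missing_in_target': [3], 'extra_in_target': [], 'changed': [2]}
```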

Comprehensive logging tools, project management software, and business intelligence platforms can simplify tracking and documentation. Clearly define metrics, automate data collection where possible, and maintain a regular monitoring schedule. Document findings and remediation efforts to build a knowledge base for future migrations and demonstrate accountability to stakeholders.

Performance Optimization and Monitoring

Optimizing database performance is crucial, especially when downtime costs can average a staggering $5,600 per minute.

Adding Indexes to Key Columns

Once your environments and schema are set up, the next step is fine-tuning performance through targeted indexing. Indexing can transform system performance. For example, IBM's FileNet P8 repository saw transaction response times drop from 7,000 milliseconds to just 200 milliseconds after indexing the UBE06_SEARCHTYPE column. CPU usage also dropped significantly - from 50-60% to just 10-20% - proving how impactful strategic indexing can be.

To start, identify frequently executed queries and focus on indexing their key columns. These often include primary keys, foreign keys, and columns used in WHERE, JOIN, and ORDER BY clauses.

Choose the right type of index for the job:

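- Clustered indexes: Determine the physical order of the table's rows (so a table can have only one), which makes range searches efficient.

- Non-clustered indexes: Separate structures that store the indexed values with pointers back to the rows, suited to frequently queried columns.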

- Composite indexes: Cover multiple columns, ideal for queries that consistently involve several fields together.

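However, more indexes don’t always mean better performance. Every index has to be updated on each insert, update, and delete, so over-indexing slows down write operations. Focus on indexing columns that are often queried but rarely updated. For columns that are updated frequently, filtered indexes (called partial indexes in PostgreSQL) can be a smarter choice: they cover only a specific subset of rows, so writes to rows outside that subset don’t have to maintain the index.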

Use tools like MySQL's EXPLAIN or SQL Server's execution plans to confirm that your queries actually use the indexes you created. Also watch for index fragmentation, which can degrade performance over time; if it occurs, you may need to rebuild or reorganize the affected indexes.
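The same before-and-after check can be run with SQLite's `EXPLAIN QUERY PLAN`, used here as a self-contained stand-in for MySQL's EXPLAIN. The `orders` table and data are hypothetical, and the exact wording of the plan text varies between SQLite versions, so the sketch only looks for a full-table scan before indexing and the new index name afterward.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total_cents INTEGER)")
conn.executemany(
    "INSERT INTO orders (customer_id, total_cents) VALUES (?, ?)",
    [(i % 500, i) for i in range(10_000)],
)

QUERY = "SELECT * FROM orders WHERE customer_id = ?"


def plan(sql: str) -> str:
    """Join the 'detail' column (4th field) of each EXPLAIN QUERY PLAN row."""
    return " | ".join(row[3] for row in conn.execute(f"EXPLAIN QUERY PLAN {sql}", (42,)))


before = plan(QUERY)  # expect a full-table SCAN of orders
conn.execute("CREATE INDEX idx_orders_customer_id ON orders (customer_id)")
after = plan(QUERY)   # expect a SEARCH using idx_orders_customer_id
print(before)
print(after)
```

Running this kind of check in CI against your most frequent queries catches the case where a schema change quietly stops a query from using its index.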

Tracking Performance Metrics

Effective monitoring means focusing on metrics tailored to your database type. Here’s a breakdown:

| Database Type | Key Metrics | Why They Matter |
|---|---|---|
| MySQL/MariaDB | InnoDB buffer pool usage, Slow query log, Table locks | Uncovers bottlenecks unique to MySQL. |
| PostgreSQL | VACUUM frequency, Bloat, Tuple stats | Addresses PostgreSQL's MVCC-specific needs. |
| MongoDB | Document scan ratio, WiredTiger cache, Oplog window | Tracks MongoDB's document-oriented performance. |
| Redis | Keyspace misses, Evictions, Fragmentation | Memory management is critical for Redis. |
| Elasticsearch | Indexing latency, Search latency, JVM heap usage | Focuses on search engine resource demands. |

Beyond database-specific metrics, monitor query performance (execution time, slow query counts, and throughput), resource usage (CPU, memory, disk I/O, and network I/O), and connection metrics (active connections, connection pool usage, and wait times). Measure throughput and latency to understand transactions per second, average response times, and how the system handles peak loads.

Set up real-time alerts for critical thresholds. For instance, if CPU usage exceeds 80% or slow queries spike, immediate notifications can help you act fast. Automate responses where possible, such as scaling resources when certain limits are reached.

Use historical data to establish performance baselines. This allows you to distinguish between temporary spikes and long-term performance issues. Analyzing trends over time can help you detect and address gradual performance declines before they escalate.
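Static thresholds and baselines complement each other: a hard limit catches the obvious emergency, while a baseline catches a metric that is still "green" but far outside its normal range. The sketch below combines both, flagging values more than three standard deviations above the historical mean. The function name, the 80% CPU limit, the z-score of 3, and the sample history are hypothetical choices.

```python
import statistics


def check_metric(name: str, current: float, history: list[float],
                 hard_limit: float, z_threshold: float = 3.0) -> list[str]:
    """Return alert messages for a hard-limit breach and/or an anomaly vs. baseline."""
    alerts = []
    if current > hard_limit:
        alerts.append(f"{name}={current} exceeds hard limit {hard_limit}")
    if len(history) >= 2:
        mean = statistics.mean(history)
        stdev = statistics.stdev(history)
        if stdev > 0 and (current - mean) / stdev > z_threshold:
            z = (current - mean) / stdev
            alerts.append(f"{name}={current} is {z:.1f} std devs above baseline {mean:.1f}")
    return alerts


cpu_history = [41, 44, 39, 42, 45, 40, 43]  # hypothetical % CPU at this hour on previous days
print(check_metric("cpu_percent", 58, cpu_history, hard_limit=80))  # baseline alert only
print(check_metric("cpu_percent", 85, cpu_history, hard_limit=80))  # both alerts
```

Comparing against the same hour on previous days, rather than the last few minutes, keeps normal daily peaks from triggering alerts.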

Platforms like newdb.io simplify monitoring by offering built-in tools that track key metrics automatically, reducing the complexity of setup and ensuring continuous visibility into your database's health.

Load Testing and Failover Planning

Optimizing your database isn’t just about queries and metrics; it’s also about ensuring reliability under pressure. Load testing and failover planning are essential steps for production readiness, especially when high-traffic events can cripple unprepared systems. Consider this: a 14-hour outage once cost $90 million.

Load Testing: Incorporate load testing early in development - not just before major releases. Start by testing normal traffic levels, then gradually increase the load to find breaking points. Simulate different scenarios like sustained high traffic, sudden spikes, or gradual increases. Stress testing pushes the system to its limits, helping you identify bottlenecks before users are affected.
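The ramp-up approach can be expressed as a small driver: step through increasing concurrency levels, measure 95th-percentile latency at each, and stop at the first level that breaks your latency objective. In practice `run_at` would drive real traffic through a load-testing tool; here `fake_run` is a hypothetical latency model so the sketch runs on its own, and the 100 ms SLO is an assumed target.

```python
import statistics
from typing import Callable, Optional


def p95(latencies_ms: list[float]) -> float:
    """95th percentile: quantiles(n=20) returns 19 cut points, the last is p95."""
    return statistics.quantiles(latencies_ms, n=20)[-1]


def find_breaking_point(run_at: Callable[[int], list[float]], levels: list[int],
                        slo_p95_ms: float) -> Optional[int]:
    """Ramp through increasing concurrency; return the first level that breaks the SLO."""
    for concurrency in levels:
        observed = p95(run_at(concurrency))
        print(f"concurrency={concurrency:>4}  p95={observed:.1f} ms")
        if observed > slo_p95_ms:
            return concurrency
    return None


def fake_run(concurrency: int) -> list[float]:
    """Hypothetical model: flat latency until ~200 clients, then queueing adds delay."""
    base = 20 + max(0, concurrency - 200) * 0.5
    return [base + (i % 10) for i in range(200)]


print(find_breaking_point(fake_run, [50, 100, 200, 400, 800], slo_p95_ms=100))  # 400
```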

Failover Planning: Build redundancy at multiple levels. Use load balancing to distribute traffic, replicate data across different locations, and implement fault tolerance mechanisms that reroute traffic away from failures. Regularly simulate failures - disable primary instances or disconnect networks - to ensure your failover systems work as expected. During these tests, verify data integrity to confirm consistency across all locations.
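On the client side, rerouting away from a failed primary can be as simple as probing endpoints in priority order. The sketch below pairs a basic TCP reachability probe with a selector, then runs a failover drill by simulating a dead primary. The hostnames are hypothetical, and a TCP connect is only a liveness signal: a production health check should also run a trivial query and check replication lag, and promoting a replica is the job of your database or cluster manager.

```python
import socket
from typing import Callable, Sequence


def tcp_probe(endpoint: str, timeout: float = 1.0) -> bool:
    """Cheap liveness check: can a TCP connection to host:port be opened?"""
    host, port = endpoint.rsplit(":", 1)
    try:
        with socket.create_connection((host, int(port)), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, DNS failure, or timeout
        return False


def pick_endpoint(endpoints: Sequence[str],
                  is_healthy: Callable[[str], bool] = tcp_probe) -> str:
    """Return the first healthy endpoint in priority order (primary first)."""
    for endpoint in endpoints:
        if is_healthy(endpoint):
            return endpoint
    raise RuntimeError("no healthy database endpoint available")


# Failover drill: simulate the primary being down with a fake probe.
ENDPOINTS = ["db-primary.internal:5432", "db-replica-1.internal:5432"]  # hypothetical hosts
down = {"db-primary.internal:5432"}
print(pick_endpoint(ENDPOINTS, is_healthy=lambda e: e not in down))  # db-replica-1.internal:5432
```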

Achieving "five nines" availability (99.999%) - which translates to just 5.26 minutes of downtime per year - requires robust monitoring, automated failover, and consistent disaster recovery testing.
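The downtime budget behind each availability target is simple arithmetic: allowed downtime equals (1 - availability) times the minutes in a year. Using an average year of 365.25 days (525,960 minutes), five nines works out to about 5.26 minutes, as stated above.

```python
MINUTES_PER_YEAR = 365.25 * 24 * 60  # 525,960 minutes, averaging in leap years


def downtime_minutes_per_year(availability: float) -> float:
    """Maximum downtime per year allowed by an availability target."""
    return (1 - availability) * MINUTES_PER_YEAR


for label, target in [("99.9%", 0.999), ("99.99%", 0.9999), ("99.999%", 0.99999)]:
    print(f"{label:>8}: {downtime_minutes_per_year(target):7.2f} min/year")
# 99.9%: 525.96, 99.99%: 52.60, 99.999%: 5.26
```

Each extra nine cuts the budget tenfold, which is why five nines is only realistic with automated failover: a human responding to a page can easily use up the whole year's allowance on one incident.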

For added resilience, consider hybrid database strategies. Combining relational and NoSQL databases can help distribute workloads more effectively, with each system optimized for specific tasks.

Performance optimization isn’t a one-time task - it’s an ongoing process of monitoring, testing, and refining. By staying proactive, you can ensure your database remains reliable and efficient, even under the most demanding conditions.

Conclusion: Building Reliable Database Infrastructure

Creating a dependable database infrastructure is more than just ticking off a checklist - it's about laying the groundwork for sustainable growth. The strategies discussed in this guide, like isolating environments and keeping a close eye on performance, work together to minimize risks like outages and data loss. Each step reinforces the next, ensuring a solid, secure, and efficient system.

The most successful database setups share a few key traits: detailed documentation, automation to reduce human error, and proactive monitoring to catch problems before they escalate. Keeping documentation current not only simplifies troubleshooting but also keeps your team on the same page. Meanwhile, automation ensures consistency across environments, enhancing reliability.

A well-planned setup leads to reduced downtime, quicker issue resolution, and predictable performance. Companies that adopt thorough database practices often experience noticeable gains in both stability and efficiency.

Platforms such as newdb.io can help by automating tasks like environment provisioning, enforcing security protocols, and monitoring performance. These tools lighten the manual workload and ensure consistent processes, which becomes increasingly important as your systems grow more complex.

FAQs

Why is it important to use separate environments for development, testing, and production when setting up a database?

Using distinct environments for development, testing, and production is crucial for maintaining system reliability, data protection, and efficient operations. Each environment plays a unique role: development is where ideas take shape and features are built, testing is where bugs are caught and resolved, and production is where everything runs live for end users.

Keeping these environments separate helps prevent unintended changes or security issues in the live system. Developers can experiment with new features without affecting real users, while the testing phase ensures everything works as expected before going live. This structure reduces mistakes, strengthens security, and makes the transition from development to production smooth and dependable.

How does Infrastructure as Code (IaC) help create consistent and secure database environments?

Infrastructure as Code (IaC) simplifies the creation of consistent database environments by automating the setup process. This ensures that development, staging, and production environments are configured exactly the same, minimizing errors caused by manual setup or configuration drift.

IaC also boosts security by enabling version control for configuration files. With a clear record of changes, you can quickly roll back faulty updates and reduce the likelihood of human mistakes. By automating deployments and maintaining an audit trail, IaC not only protects your database environments but also streamlines operations for better efficiency.

What are the best practices for managing database schema changes to maintain stability and optimize performance?

To manage database schema changes effectively while maintaining stability and performance, here are some practical tips:

- Make small, incremental updates: Breaking changes into smaller, manageable updates reduces the risk of errors and minimizes downtime.

- Use version control systems: Keep a detailed record of all schema changes in version control. This makes it easier to track modifications and collaborate with your team.

- Test in a staging environment: Before rolling out changes, thoroughly test them in a staging setup to catch potential issues early.

- Prepare a rollback plan: Always have a rollback strategy ready. This ensures you can quickly revert changes if something goes wrong.

It’s also important to evaluate how these changes might affect database performance and availability. Keeping stakeholders informed throughout the process can help ensure a seamless transition.