SQL Indexing vs Partitioning for Large Data

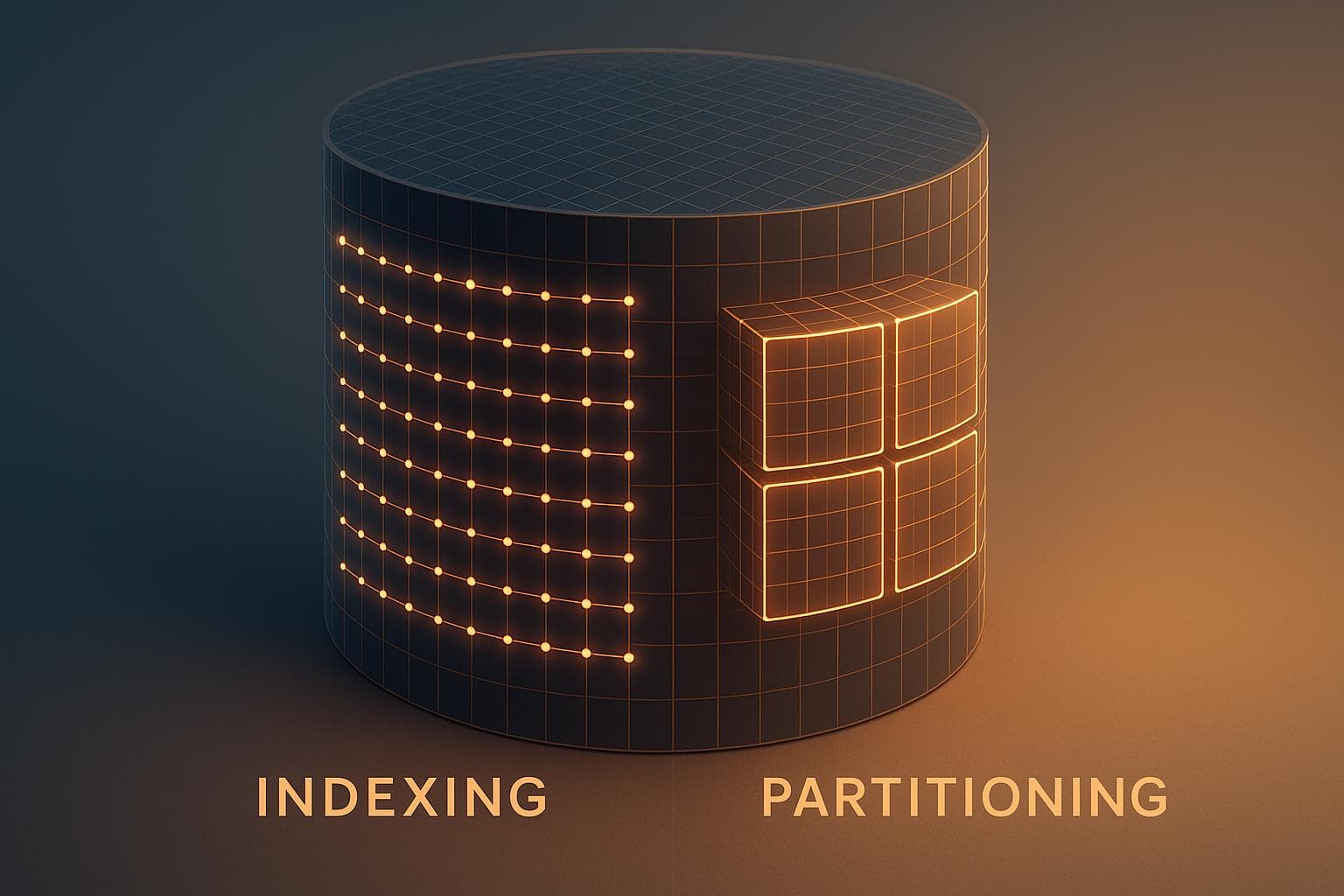

When dealing with massive datasets, SQL indexing and partitioning are two powerful methods to improve query performance and manage data efficiently. Here’s the key takeaway:

- Indexing helps locate specific data quickly by creating structured maps, ideal for point lookups and range queries.

- Partitioning splits large tables into smaller chunks, making it easier to process large-scale scans or manage time-series data.

Use indexing for transactional systems with diverse queries. Leverage partitioning for analytical workloads or massive datasets, especially when queries align with partition keys. For the best results, combine both techniques: partition tables logically and apply targeted indexes within each partition.

Quick Overview:

- Indexes improve query speed but require extra storage and maintenance.

- Partitioning simplifies managing large tables but adds setup complexity.

- Both methods can work together to optimize performance in cloud databases.

Let’s dive deeper into how these methods work and when to use each.

What Is SQL Indexing

SQL indexing helps databases quickly locate specific data, avoiding the need to scan an entire table.

SQL Indexing Basics

An index is essentially a separate data structure that acts like a roadmap, pointing to the exact location of data within a table. Most relational databases build indexes as B-trees by default, and an index is either clustered or non-clustered.

- B-tree indexes: These organize data into a tree-like structure, making it easier to narrow down searches. They're ideal for tasks like finding exact matches (e.g., `WHERE user_id = 12345`) or performing range queries (e.g., `WHERE created_date BETWEEN '2024-01-01' AND '2024-12-31'`).

- Clustered indexes: These work differently by sorting and physically storing the table's rows based on key values. The table and the index are essentially merged, with the actual data stored in the index's leaf nodes. Because the table's rows can only be sorted one way, a table can only have one clustered index.

- Non-clustered indexes: Unlike clustered indexes, these create a separate structure that links back to the rows in the table. A single table can have multiple non-clustered indexes, each optimized for different types of queries.
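The three index types above can be sketched with Python's built-in sqlite3 module. This is an illustration under assumptions, not the syntax of any particular production database: the `events` table and its columns are hypothetical, and SQLite expresses a clustered layout through a `WITHOUT ROWID` table rather than a `CLUSTERED` keyword.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Clustered layout: a WITHOUT ROWID table stores its rows inside the
# primary-key B-tree, so rows are kept in (created_date, id) order.
conn.execute("""
    CREATE TABLE events (
        created_date TEXT    NOT NULL,
        id           INTEGER NOT NULL,
        user_id      INTEGER NOT NULL,
        payload      TEXT,
        PRIMARY KEY (created_date, id)
    ) WITHOUT ROWID
""")

# Non-clustered (secondary) B-tree index: a separate structure whose
# entries point back to the rows. A table can have several of these.
conn.execute("CREATE INDEX idx_events_user ON events (user_id)")


def plan(sql):
    """Return the plan detail strings SQLite reports for a query."""
    return [row[3] for row in conn.execute("EXPLAIN QUERY PLAN " + sql)]


# Exact match on user_id: served by the secondary index.
point_plan = plan("SELECT * FROM events WHERE user_id = 12345")

# Range on created_date: served by the clustered primary key.
range_plan = plan(
    "SELECT * FROM events "
    "WHERE created_date BETWEEN '2024-01-01' AND '2024-12-31'"
)

print(point_plan)
print(range_plan)
```

The exact wording of the plan text varies between SQLite versions, so treat the printed strings as diagnostic output rather than a stable format.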

How Indexes Speed Up Queries

Indexes improve query performance by cutting the number of rows the database has to examine. Without an index, the database performs a sequential scan, examining each row one by one. With a B-tree index, it walks from the root to a leaf in a number of steps that grows logarithmically with table size, much like a binary search, and then reads only the matching rows.
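To give a feel for the difference in work, here is a simplified model in Python. It is not how a database engine is implemented: the "table" is a shuffled list of hypothetical user IDs, and the "index" is a sorted list of (key, row position) pairs searched by binary search, standing in for a B-tree.

```python
import random

random.seed(0)
n = 100_000

# The "table": row position -> user_id, in no particular order.
user_ids = list(range(n))
random.shuffle(user_ids)

# The "index": (user_id, row position) pairs kept sorted by user_id.
sorted_index = sorted((uid, row) for row, uid in enumerate(user_ids))


def sequential_scan(rows, target):
    """Check rows one by one; return (row position, rows checked)."""
    checked = 0
    for row, uid in enumerate(rows):
        checked += 1
        if uid == target:
            return row, checked
    return None, checked


def index_lookup(index, target):
    """Binary search over the sorted index; return (row position, keys checked)."""
    lo, hi, checked = 0, len(index), 0
    while lo < hi:
        mid = (lo + hi) // 2
        checked += 1
        if index[mid][0] < target:
            lo = mid + 1
        else:
            hi = mid
    if lo < len(index) and index[lo][0] == target:
        return index[lo][1], checked
    return None, checked


# Worst case for the scan: the target sits in the last row.
target = user_ids[-1]
scan_row, scan_checked = sequential_scan(user_ids, target)
index_row, index_checked = index_lookup(sorted_index, target)

print(f"scan checked {scan_checked} rows, index checked {index_checked} keys")
```

With 100,000 rows the scan inspects every row in this worst case, while the binary search needs at most 17 comparisons. A real B-tree has a much higher fan-out per node, so it typically touches even fewer pages.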

Clustered indexes are especially useful for range-based queries. For example, if you're searching for records within a specific date range, a clustered index on the date column ensures that the matching rows are stored together, making retrieval much faster.

Downsides of Indexing

While indexes can significantly boost query performance, they aren't without drawbacks. Each index takes up extra storage space and can slow down write operations, as the database must update the index every time data is added, modified, or deleted.

Indexes also need regular maintenance. Over time, as data changes, indexes can become fragmented, which can hurt performance. Rebuilding or reorganizing indexes periodically helps maintain their efficiency. Additionally, poorly designed indexes can waste resources if they're rarely used or don't align with query patterns.

Indexes are a powerful tool for speeding up data retrieval by narrowing down searches. In the next section, we'll dive into how partitioning takes optimization a step further by splitting data into manageable chunks for better processing.

What Is SQL Partitioning

SQL partitioning is a technique that breaks down large tables into smaller, more manageable pieces called partitions.

SQL Partitioning Basics

Partitioning works by dividing a table based on specific criteria, creating multiple segments that can be processed separately while still appearing as a single table to users and applications.

There are several ways to partition data:

- Range Partitioning: Divides data based on a range of values, such as splitting sales data by months or years.

- List Partitioning: Groups records according to predefined values, like geographic regions.

- Hash Partitioning: Distributes rows evenly when no natural grouping exists.
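As a rough sketch of how each scheme decides where a row goes, the Python functions below map a value to a partition name or number. The boundaries, region names, and partition count are made up for illustration. In a real database the engine performs this routing from the partition definition.

```python
import bisect
import zlib
from datetime import date

# Range partitioning: sorted boundaries, each the exclusive upper bound
# of one partition. Values at or past the last boundary go to the final one.
RANGE_BOUNDS = [date(2024, 4, 1), date(2024, 7, 1), date(2024, 10, 1)]
RANGE_NAMES = ["p_before_2024_04", "p_2024_q2", "p_2024_q3", "p_from_2024_10"]


def range_partition(sale_date: date) -> str:
    # bisect_right counts how many boundaries are <= sale_date.
    return RANGE_NAMES[bisect.bisect_right(RANGE_BOUNDS, sale_date)]


# List partitioning: an explicit mapping from values to partitions.
LIST_MAP = {"US": "americas", "CA": "americas", "DE": "emea", "FR": "emea", "JP": "apac"}


def list_partition(country: str) -> str:
    return LIST_MAP.get(country, "default")


# Hash partitioning: spread keys across a fixed number of partitions.
def hash_partition(key: str, partitions: int = 4) -> int:
    # crc32 is stable across runs, unlike Python's built-in hash() for str.
    return zlib.crc32(key.encode("utf-8")) % partitions


print(range_partition(date(2024, 2, 15)))
print(list_partition("DE"))
print(hash_partition("user-12345"))
```

Range and list partitioning keep related rows together, which is what makes partition elimination possible. Hash partitioning trades that locality for an even spread.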

SQL Server 2012 and later allow up to 15,000 partitions per table or index by default; earlier versions were limited to 1,000. Large partition counts carry a memory cost: when SQL Server sorts data to build a partitioned index, the sort table for each partition needs at least 40 pages (320 kilobytes, at 8 KB per page).

Next, let’s explore how partitioning can enhance performance and simplify data management.

Partitioning Benefits

Partitioning offers several advantages, particularly for query performance and data management:

- Improved Query Performance: Partitioning enables partition elimination, where the database optimizer skips over irrelevant partitions when filtering on the partitioning column. This reduces the amount of data scanned.

- Efficient Data Management: Large-scale operations, such as loading, deleting, or archiving data, can be performed quickly by switching entire partitions in or out of a table.

- Simplified Maintenance: Maintenance tasks like rebuilding indexes or compressing data can focus on individual partitions instead of the entire table. Partitioning also supports tiered storage strategies, where frequently accessed data resides on faster storage, while older data is moved to cost-effective options.

- Enhanced Scalability: Data can be distributed across multiple filegroups or servers, reducing contention and avoiding single points of failure. Partition-level lock escalation further minimizes contention in high-traffic environments.
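Partition elimination, the first benefit above, comes down to a range-overlap check. The sketch below is a conceptual model in Python, with hypothetical monthly partitions of a `sales` table. A real optimizer makes this decision from the partition metadata during query planning.

```python
from datetime import date

# Hypothetical monthly partitions: name -> (inclusive start, exclusive end).
PARTITIONS = {
    "sales_2024_01": (date(2024, 1, 1), date(2024, 2, 1)),
    "sales_2024_02": (date(2024, 2, 1), date(2024, 3, 1)),
    "sales_2024_03": (date(2024, 3, 1), date(2024, 4, 1)),
    "sales_2024_04": (date(2024, 4, 1), date(2024, 5, 1)),
}


def partitions_to_scan(query_start=None, query_end=None):
    """Return the partitions that overlap the inclusive range
    [query_start, query_end]. With no filter on the partitioning
    column, nothing can be eliminated and every partition is scanned."""
    selected = []
    for name, (start, end) in PARTITIONS.items():
        if query_start is not None and end <= query_start:
            continue  # partition ends before the range begins
        if query_end is not None and start > query_end:
            continue  # partition begins after the range ends
        selected.append(name)
    return selected


# WHERE sale_date BETWEEN '2024-02-10' AND '2024-03-05'
filtered = partitions_to_scan(date(2024, 2, 10), date(2024, 3, 5))

# No predicate on sale_date at all
unfiltered = partitions_to_scan()

print(filtered)
print(unfiltered)
```

The filtered query touches two of the four partitions. The unfiltered one touches all four, which is why queries that ignore the partitioning column gain nothing from it.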

While partitioning provides many advantages, it also introduces certain complexities, as discussed below.

Partitioning Problems

Despite its strengths, partitioning is not without challenges. Here are some of the key drawbacks:

- Increased Complexity: Managing partitioned tables adds layers of complexity to tasks like backups, restores, monitoring, and balancing workloads.

- Query Limitations: Queries that don’t filter on the partitioning column may scan all partitions, leading to worse performance compared to non-partitioned tables. As database expert Gail Shaw points out: "Partitioning can enhance query performance, but there is no guarantee."

- Uneven Data Distribution: Poorly chosen partition keys can create "hot partitions", which may require frequent rebalancing. Cross-partition operations, such as joins or referential integrity checks, can also be inefficient and may need extra application-level logic to aggregate results.

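- Memory Constraints: A large number of partitions increases memory pressure during memory-intensive operations such as index builds. Microsoft's SQL Server guidance recommends at least 16 GB of RAM when many partitions are in use, and even systems of that size can run short if other memory-hungry processes are running.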

- Row Updates: Changing a row's partitioning column so that it falls into a different partition forces the engine to move the row, which it carries out as a delete followed by an insert. This is slower than an in-place update.

- Schema Management: Schema changes don’t always propagate seamlessly across all partitions, often requiring manual adjustments that can lead to errors.

Balancing these trade-offs is crucial, especially when comparing partitioning with indexing, which we’ll cover in the next section.

Indexing vs Partitioning Comparison

Indexing and partitioning each bring unique advantages to managing large datasets. While indexing enhances performance for selective queries, partitioning is better suited for large-scale scans. Let’s dive into how these methods compare in terms of workload performance and maintenance needs, helping you decide which fits your requirements.

Performance for Different Workloads

For read-heavy workloads, indexing is a go-to solution for selective queries. By creating direct paths to specific rows, indexes eliminate the need to scan entire tables. This makes them ideal for point lookups and range queries on indexed columns.

On the other hand, partitioning performs exceptionally well in analytical scenarios, especially when queries involve scanning large sections of data based on time ranges or categories. Partition elimination allows databases to skip irrelevant sections entirely, cutting down on I/O operations and improving efficiency.

Write-heavy workloads present unique challenges. Indexes can slow down performance since every INSERT, UPDATE, or DELETE requires updating all related indexes. The more indexes a table has, the higher the maintenance overhead during data modifications.

With partitioning, write performance improves if new data is consistently added to recent partitions, such as in time-series datasets. Here, writes are focused on fewer partitions, reducing contention. However, modifying partition keys can be costly, requiring a delete-and-insert process across partitions that can significantly impact performance.

For mixed workloads, a balanced approach works best. Indexes handle transactional queries effectively, while partitioning supports analytical operations that scan large datasets.

Setup and Maintenance Requirements

The complexity of setup and maintenance varies significantly between the two methods. Indexes are relatively simple to create but demand ongoing upkeep. Partitioning, on the other hand, requires more planning upfront but offers a more predictable maintenance process.

Index maintenance can disrupt application availability, especially during large index rebuilds, which consume significant resources. While online index operations help minimize downtime, they often require extra storage space.

Partitioning setup is more involved, requiring you to define partition functions, schemes, and filegroups before implementation. However, once in place, ongoing maintenance is straightforward. For example, adding new partitions for time-based data follows a routine process, and archiving old data can be handled efficiently with partition switching instead of costly bulk deletes.
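The archiving idea can be imitated in a few lines of Python with sqlite3. SQLite has no native partitioning or partition switching, so this is only an analogy: each month lives in its own hypothetical `sales_YYYY_MM` table, a `sales` view unions them, and retiring a month means dropping its table instead of running a filtered bulk `DELETE`.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
months = ["2024_01", "2024_02", "2024_03"]


def rebuild_view(active_months):
    """(Re)create a view that presents the monthly tables as one table."""
    conn.execute("DROP VIEW IF EXISTS sales")
    union = " UNION ALL ".join(f"SELECT * FROM sales_{m}" for m in active_months)
    conn.execute(f"CREATE VIEW sales AS {union}")


def count_rows():
    return conn.execute("SELECT COUNT(*) FROM sales").fetchone()[0]


# One table per month, 1,000 rows each. Table names come from the fixed
# list above, never from user input.
for m in months:
    conn.execute(f"CREATE TABLE sales_{m} (sale_date TEXT, amount REAL)")
    conn.executemany(
        f"INSERT INTO sales_{m} VALUES (?, ?)",
        [(f"{m.replace('_', '-')}-15", 10.0)] * 1000,
    )
rebuild_view(months)


def archive_oldest(active_months):
    """Retire the oldest month by dropping its table, not deleting its rows."""
    oldest, remaining = active_months[0], active_months[1:]
    rebuild_view(remaining)
    conn.execute(f"DROP TABLE sales_{oldest}")
    return remaining


rows_before = count_rows()
months = archive_oldest(months)
rows_after = count_rows()

print(rows_before, rows_after)
```

In SQL Server the equivalent step is a partition switch, and in PostgreSQL it is detaching or dropping a partition. In both cases the engine keeps presenting a single table without a hand-maintained view.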

Storage management is another key difference. Indexes add storage overhead that grows with the number and width of the indexed columns; on heavily indexed tables it can reach 50–100% of the table's own size. Partitioned tables, however, enable smarter storage strategies, such as keeping recent partitions on high-performance SSDs while moving older partitions to more economical storage options.
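Index overhead is easy to measure rather than guess. The sketch below uses Python's sqlite3 module and a hypothetical `orders` table to compare database size before and after adding two indexes. The resulting percentage depends entirely on the schema and data, so it should not be read as confirming any particular figure.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders ("
    "id INTEGER PRIMARY KEY, user_id INTEGER, created_date TEXT, total REAL)"
)
conn.executemany(
    "INSERT INTO orders (user_id, created_date, total) VALUES (?, ?, ?)",
    [
        (i % 5000, f"2024-{i % 12 + 1:02d}-{i % 28 + 1:02d}", i * 0.5)
        for i in range(50_000)
    ],
)
conn.commit()


def db_bytes():
    """Total database size: number of pages times page size."""
    page_count = conn.execute("PRAGMA page_count").fetchone()[0]
    page_size = conn.execute("PRAGMA page_size").fetchone()[0]
    return page_count * page_size


base = db_bytes()

conn.execute("CREATE INDEX idx_orders_user ON orders (user_id)")
conn.execute("CREATE INDEX idx_orders_date ON orders (created_date)")

with_indexes = db_bytes()
overhead_pct = 100 * (with_indexes - base) / base

print(f"table only: {base} bytes, with indexes: {with_indexes} bytes "
      f"({overhead_pct:.0f}% overhead)")
```

Running the same measurement against a copy of production data gives a far better estimate than a rule of thumb.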

When to Use Each Method

Your choice between indexing and partitioning depends on your data access patterns and operational needs. Here’s a quick comparison:

| Aspect | Indexing | Partitioning |
|---|---|---|
| Best For | OLTP systems, diverse query patterns | Massive tables, time-series data |
| Storage Overhead | High (50–100%) | Minimal; supports tiered storage |
| Maintenance Complexity | Regular rebuilds required | Complex initial setup, easier ongoing maintenance |
| Scalability | Limited by index size and memory | Handles extremely large datasets effectively |

Choose indexing for applications with diverse query patterns or OLTP systems that rely on frequent point lookups. Opt for partitioning when working with massive tables, time-series data requiring regular archiving, or analytical workloads where queries align with partition keys.

Avoid over-indexing in write-heavy environments, as it can slow down data modifications. Similarly, avoid partitioning for smaller tables or when queries don’t align with partition boundaries - it can add unnecessary complexity without clear benefits.

In many cases, combining both methods yields the best results. For instance, large partitioned tables can use indexes within each partition to strike a balance, particularly in cloud-based SQL environments like those supported by newdb.io. This hybrid approach ensures flexibility and performance for complex workloads.

Best Practices for Large Data in Cloud SQL

Efficiently managing large datasets in cloud SQL databases requires a thoughtful approach that balances techniques like indexing and partitioning. While cloud platforms offer powerful tools for handling massive data volumes, success hinges on using the right strategies to maintain performance over time.

Using Indexing and Partitioning Together

One of the best ways to handle large datasets is by pairing indexing with partitioning. These methods work hand-in-hand to make data more accessible and queries faster. Instead of treating them as separate options, you can partition your large tables into smaller, more manageable chunks and then apply indexes within each partition.

For example, partitioning tables based on access patterns - like breaking time-series data into monthly partitions - can significantly improve query efficiency. If your queries often target recent data, this setup ensures that only a small number of partitions are queried.

Within each partition, you can create targeted indexes on the most frequently queried columns. This keeps the index sizes smaller while still speeding up query performance, as partitioning naturally limits the amount of data each index covers.

For workloads with a mix of queries, consider using a clustered index on the partition key alongside non-clustered indexes on other important columns. This combination helps optimize both partition filtering and specific lookups. Regularly reviewing query execution plans ensures that your indexing and partitioning strategies continue to meet performance needs.
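Here is a minimal sketch of the combined approach, again using Python's sqlite3 module. Because SQLite lacks native partitioning, the partition routing is done in application code, and the monthly `events_YYYY_MM` tables and helper functions are hypothetical. Engines with built-in partitioning route rows and prune partitions themselves.

```python
import sqlite3

conn = sqlite3.connect(":memory:")


def partition_for(day: str) -> str:
    """Map an ISO date such as '2024-03-15' to its monthly table name."""
    return f"events_{day[:4]}_{day[5:7]}"


# Create three monthly partitions, each with its own small index.
for month in ("2024-01", "2024-02", "2024-03"):
    table = partition_for(month + "-01")
    conn.execute(
        f"CREATE TABLE {table} (created_date TEXT, user_id INTEGER, action TEXT)"
    )
    conn.execute(f"CREATE INDEX idx_{table}_user ON {table} (user_id)")


def insert_event(day, user_id, action):
    conn.execute(
        f"INSERT INTO {partition_for(day)} VALUES (?, ?, ?)",
        (day, user_id, action),
    )


def events_for_user_on(day, user_id):
    # Step 1: the date selects a single partition (partition filtering).
    # Step 2: the per-partition index locates the user's rows inside it.
    sql = (
        f"SELECT action FROM {partition_for(day)} "
        "WHERE created_date = ? AND user_id = ?"
    )
    return [row[0] for row in conn.execute(sql, (day, user_id))]


insert_event("2024-03-15", 7, "login")
insert_event("2024-03-15", 8, "purchase")
insert_event("2024-02-10", 7, "logout")

result = events_for_user_on("2024-03-15", 7)

# Confirm the lookup inside the partition goes through its index.
plan_detail = " | ".join(
    row[3]
    for row in conn.execute(
        "EXPLAIN QUERY PLAN SELECT action FROM events_2024_03 "
        "WHERE created_date = ? AND user_id = ?",
        ("2024-03-15", 7),
    )
)

print(result)
print(plan_detail)
```

Each index covers one month of data only, so it stays small even as the overall dataset grows.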

Cloud Database Solutions

Modern cloud database platforms simplify many challenges of managing large datasets by offering built-in optimization features and automation tools. These platforms handle much of the heavy lifting, allowing developers to focus on fine-tuning their indexing and partitioning strategies.

Take newdb.io as an example. This platform provides production-ready SQL databases with instant setup and global distribution. Built on libSQL and Turso, it delivers strong performance and scalability, making it ideal for applications that deal with large datasets. Its user-friendly interface eliminates much of the complexity of provisioning, letting developers concentrate on optimization.

Cloud platforms also shine when it comes to automatic scaling and resource management. As your partitioned tables grow, these systems can allocate additional storage and computing power automatically. This reduces the need for manual capacity planning and helps prevent performance bottlenecks during high-demand periods.

Global distribution ensures low-latency access, no matter where your users are located.

Developer-friendly tools like visual data editors, automated backups, and seamless support for multiple data formats (SQL, CSV, JSON, XLSX) make database management smoother. Integration with tools like Prisma ensures that your optimization strategies fit seamlessly into your existing application workflows.

Ongoing Monitoring and Tuning

Managing large datasets isn’t a one-and-done task - it requires consistent monitoring and adjustments as data grows and usage patterns shift. What works for a smaller dataset may falter at scale, so keeping an eye on performance metrics is critical.

Start by setting performance baselines. Track metrics such as query response times, partition usage, and index efficiency. Many cloud platforms offer built-in dashboards to make this process easier. Alerts for performance drops can help you address issues before they affect users.

Reviewing query execution plans regularly can reveal new patterns or inefficiencies that need attention. Automating tasks like partition creation and archival can help maintain performance while controlling costs.
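Plan reviews can be partly automated. The following sketch, which assumes a hypothetical `orders` table and a hand-picked set of watched queries, uses SQLite's `EXPLAIN QUERY PLAN` from Python to flag queries that fall back to a full table scan. Other databases expose plans differently, so the string check here is specific to SQLite.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, user_id INTEGER, status TEXT)"
)
conn.execute("CREATE INDEX idx_orders_user ON orders (user_id)")

# Queries the application runs often (illustrative examples).
WATCHED_QUERIES = {
    "orders_by_user": "SELECT * FROM orders WHERE user_id = 1",
    "orders_by_status": "SELECT * FROM orders WHERE status = 'open'",
}


def full_scan_queries(queries):
    """Return the names of queries whose plan contains a full scan."""
    flagged = []
    for name, sql in queries.items():
        details = [row[3] for row in conn.execute("EXPLAIN QUERY PLAN " + sql)]
        # SQLite labels full scans "SCAN ..." and index lookups "SEARCH ...".
        if any(d.startswith("SCAN") for d in details):
            flagged.append(name)
    return flagged


flagged = full_scan_queries(WATCHED_QUERIES)
print(flagged)
```

Here only the status query is flagged, because no index covers `status`. A check like this could run on a schedule or in CI, so that a new query pattern without a supporting index is noticed early.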

Index maintenance is another key area. Scheduling maintenance tasks like index rebuilds during low-traffic times can minimize their impact. Many cloud platforms allow temporary resource scaling during these windows, ensuring maintenance doesn’t disrupt overall performance.

Finally, keep an eye on resource usage trends. By understanding how your data and workload evolve, you can make smarter scaling decisions and keep your system running smoothly over time.

Conclusion

When working with large datasets, you don’t have to choose exclusively between indexing and partitioning. Each serves a distinct purpose, and the best results often come from using them together in a way that aligns with your data structure and workload.

Indexing is ideal for speeding up specific queries and point lookups. However, as indexes grow in size, they require more maintenance and can sometimes slow down write operations. On the other hand, partitioning is particularly effective for massive datasets that can be logically divided. It reduces the amount of data scanned and supports efficient parallel processing.

For organizations handling large datasets in cloud environments, a combination of these techniques often delivers the best performance. Start by partitioning tables based on access patterns, then add targeted indexes within each partition. This approach keeps index sizes manageable while ensuring fast query performance. When indexes are local to each partition (aligned with the partitioning scheme), you can rebuild the index on a single partition without touching the rest of the dataset.

To maintain system performance, leverage automated partition management tools and perform routine maintenance: VACUUM and ANALYZE in PostgreSQL, or index reorganization and statistics updates in SQL Server.

FAQs

How do I choose between indexing, partitioning, or both for optimizing my SQL database performance?

When deciding between indexing, partitioning, or using both, it all comes down to the size of your data and how your queries are structured.

- Indexing is great for speeding up specific queries, like point lookups or filtering smaller, frequently accessed data subsets. That said, don’t overdo it - too many indexes can bog down write operations, so use them wisely.

- Partitioning shines when dealing with massive datasets. By breaking tables into smaller, more manageable chunks, it boosts query performance with techniques like partition pruning and parallel processing. Plus, it makes maintenance tasks a lot easier.

For large-scale databases, combining both strategies can be a game-changer. Partitioning limits the amount of data scanned, while indexing speeds up pinpoint searches within those partitions. To figure out the best fit, take a close look at your query patterns and data scale to strike the right balance.

What mistakes should I avoid when using partitioning in a SQL database?

When setting up partitioning in a SQL database, there are a few common missteps that can cause performance problems or make management unnecessarily complicated. One of the biggest issues is selecting the wrong partition key. A poorly chosen key can lead to uneven data distribution, also known as data skew, which often results in sluggish query performance. Another frequent mistake is neglecting to properly manage partition sizes - when partitions grow too large, queries can slow down significantly.

Overcomplicating the database design is another trap to avoid. A design that's too intricate can make maintenance harder and increase the likelihood of errors creeping in. Additionally, partitioning can add overhead to your system, especially if your database doesn't have the memory or resources to handle it smoothly. To avoid these issues, it's crucial to test your partitioning strategy using actual data and workloads. This ensures it supports your performance and scalability requirements effectively.

What are the differences between indexing and partitioning, and how do they improve the performance of large SQL databases like newdb.io?

Managing large datasets efficiently often comes down to two key techniques: indexing and partitioning. These methods are essential for improving database performance and ensuring smooth data operations.

Indexing works like a roadmap for your data. By creating an organized structure for specific columns, it allows queries to locate information much faster. This is particularly useful for frequently accessed data or when running complex queries that could otherwise take a long time to process.

Partitioning, on the other hand, breaks massive datasets into smaller, more manageable chunks. Queries that filter on the partition key touch only the relevant chunks, and some engines can process partitions in parallel, which speeds up response times. In distributed databases, partitions can also be spread across multiple nodes, helping the database stay efficient and responsive as data grows.

When combined, these techniques enable cloud-based SQL databases like newdb.io to handle large-scale operations seamlessly. The result? Faster performance and the ability to scale effortlessly for modern applications.